Web Scraping the MSA through Reverse Engineering

To get the data used in this module, we used the Legacy of Slavery in Maryland website of the Maryland State Archives (MSA), which hosts many databases on slavery in Maryland. We chose runaway slave advertisements as the primary topic for this module. We extracted these records from the database on the website, a process called "web scraping". Web scraping a web page involves fetching it and extracting data from it. Fetching is downloading the page (which a browser does when you view the page). Once the page has been fetched, extraction can take place. This notebook shows how we obtained the data used in the other notebooks of this module. We explain the data transcription process, the Legacy of Slavery website (the source of the data), the technical challenges involved in scraping it, and the code for our web scraper.
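To make the two steps concrete, here is a minimal sketch that uses only Python's standard library (not the libraries our scraper actually uses): `fetch` downloads a page, and `extract_title` pulls one piece of data, the page's `<title>` text, out of the downloaded HTML. The function and class names are illustrative, not part of our scraper.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


def fetch(url):
    """Fetching: download the raw HTML of a page, as a browser would."""
    with urlopen(url) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset)


class TitleExtractor(HTMLParser):
    """Extraction: collect the text inside the <title> element."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def extract_title(html):
    parser = TitleExtractor()
    parser.feed(html)
    return parser.title.strip()


# Usage (requires network access):
# html = fetch("http://slavery2.msa.maryland.gov/pages/Search.aspx")
# print(extract_title(html))
```

Keeping fetching and extraction in separate functions means the extraction step can be tested on saved HTML without contacting the website.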

Data Transcription

To get this data, we used the online database that lists all Run-Away Slave Advertisements in Maryland.

The website we used to get this data is: http://slavery2.msa.maryland.gov/pages/Search.aspx

Slavery in Maryland lasted more than 200 years, from its beginnings in 1642 until its abolition in 1864. Most runaway advertisements were published in local newspapers or posted on boards around the cities. Most of the data was transcribed by scanning these newspapers and posted advertisements; after scanning, the records were entered into the database on the website.

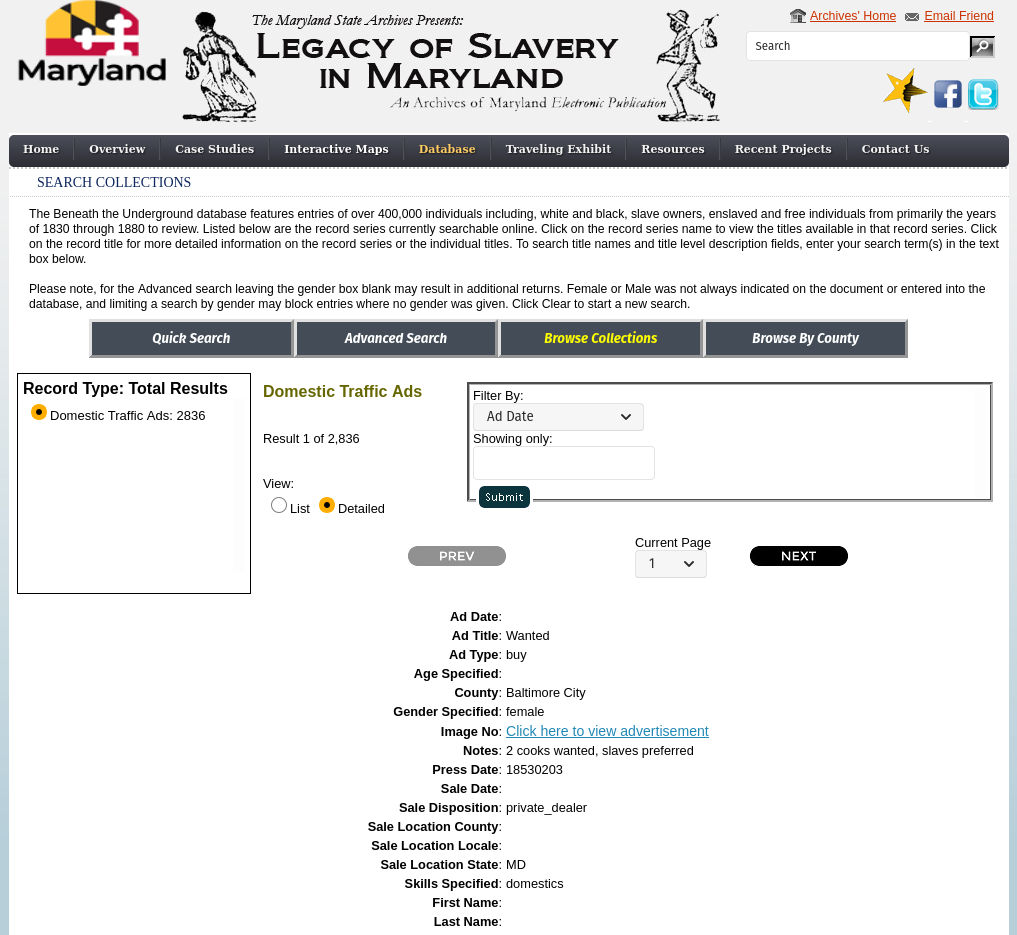

Here is a screenshot of the website we are web scraping from:

Legacy of Slavery Website

The Legacy of Slavery in Maryland website contains databases, maps, case studies, records, and other material on this topic, including the database used for this module. The website is designed for ordinary browsing: any user can search and view the data in a web browser. However, we did not have access to any backend databases, so we had to extract the data from the website itself. Since we wanted only the Run-Away Slave Advertisement data, we extracted it by web scraping. Once a page has been fetched, its content can be parsed, searched, reformatted, copied into a spreadsheet, and so on; web scrapers typically take something out of a page to use it for another purpose somewhere else.
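As an illustration of copying page data into a spreadsheet, the sketch below turns the rows of a simple HTML table into CSV text with Python's standard `html.parser` and `csv` modules. It assumes well-formed markup with explicit `</td>` and `</tr>` tags; the class and function names are hypothetical, and this is not how our scraper processes the Legacy of Slavery pages.

```python
import csv
import io
from html.parser import HTMLParser


class TableRows(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row being read
        self._cell = None  # text of the cell being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = ""

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append(self._cell.strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._cell is not None:
            self._cell += data


def table_to_csv(html):
    """Return the table rows found in html as CSV text."""
    parser = TableRows()
    parser.feed(html)
    out = io.StringIO()
    csv.writer(out).writerows(parser.rows)
    return out.getvalue()
```

The `csv` module takes care of quoting cells that contain commas or quotes, which is easy to get wrong when joining strings by hand.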

Technical Challenges with Web Scraping

While extracting data from the database, we ran into a few technical challenges. First, it was difficult to make programmatic requests to the website. The search page (Search.aspx) is an ASP.NET page, so new results are requested through form postbacks that must carry hidden fields such as __VIEWSTATE and __EVENTTARGET. Furthermore, pagination was a particular issue: the results are spread over many pages, each loaded through an AJAX postback, so it was challenging to gather all of the records into one large dataset.
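To show what a programmatic postback involves, here is a minimal sketch that builds the postback-specific fields of an ASP.NET AJAX request body with `urllib.parse.urlencode`. The field names (`__EVENTTARGET`, `__EVENTARGUMENT`, `__VIEWSTATE`, `__ASYNCPOST`) are the ones our scraper sets; the function name `build_postback` is hypothetical, and a real request also carries every other field of the page's form, which this sketch leaves out.

```python
from urllib.parse import urlencode


def build_postback(event_target, view_state, extra_fields=None):
    """Hypothetical helper: URL-encoded body for an ASP.NET AJAX postback."""
    fields = {
        "__EVENTTARGET": event_target,  # name of the control that "fired" the event
        "__EVENTARGUMENT": "",
        "__VIEWSTATE": view_state,      # taken from the previous response
        "__ASYNCPOST": "true",          # ask for a partial (AJAX) response
    }
    fields.update(extra_fields or {})   # e.g. the page-number drop-down
    return urlencode(fields)
```

Because `__VIEWSTATE` has to be taken from the previous response, each request depends on the one before it, which is what makes the pages harder to request independently.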

To resolve these issues, we found an article by Todd Hayton on scraping ASP.NET pages with AJAX pagination, which describes this exact problem and offers a solution: http://toddhayton.com/2015/05/04/scraping-aspnet-pages-with-ajax-pagination/

We read the article thoroughly, took its general code, and adapted it to this module. This solved the pagination problem: we scraped every page of results and combined them into a single, large dataset of all Run-Away Slave Advertisements.
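The core idea of the approach can be sketched independently of the website: each postback returns both the page content and a new view state, and the next request must carry that view state forward. In the sketch below, `post_page` is a hypothetical stand-in for the HTTP round trip (in our scraper, rebuilding the saved form and submitting it with mechanize); the loop stops when a page reports that it is the last one, or after an optional `max_pages`.

```python
def scrape_all(post_page, first_view_state, max_pages=None):
    """Follow "Next" postbacks, carrying the view state from page to page.

    post_page(pageno, view_state) -> (content, new_view_state, is_last)
    is a hypothetical callable that performs one request.
    """
    pages = []
    view_state = first_view_state
    pageno = 1
    while max_pages is None or pageno <= max_pages:
        content, view_state, is_last = post_page(pageno, view_state)
        pages.append(content)
        if is_last:
            break
        pageno += 1
    return pages
```

Passing the round trip in as a function keeps the pagination logic testable without network access.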

Our Web Scraper

The code for the web scraper was written by Todd Hayton and Greg Jansen. It first imports the Python libraries "re", "urlparse" (from urllib.parse), and "mechanize", a library for programmatic web browsing that can fill in and submit forms. It also imports a library called "Beautiful Soup", which does the main extraction of data by pulling data out of HTML and XML files. The scrape function is the primary function of the program. It opens the search page, switches the results to the "Detailed" display mode, and extracts the data from the first page. After each page, it checks whether the "Next Page" button is grayed out (an input element titled "Next Grayed Out"); if it is not, the program submits a postback for the next page and repeats. The program keeps running until the "Next Page" button is no longer available. The raw HTML of each results page is saved to its own file (pages/page-1.html, pages/page-2.html, and so on); these files are the raw material for the single, large dataset.
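The last-page check looks for an `input` element whose `title` is "Next Grayed Out". A dependency-free equivalent of that Beautiful Soup lookup, written as a sketch with the standard `html.parser` module (the names `NextButtonFinder` and `is_last_page` are hypothetical), could be:

```python
from html.parser import HTMLParser


class NextButtonFinder(HTMLParser):
    """Flag whether the page contains the grayed-out Next button."""

    def __init__(self):
        super().__init__()
        self.grayed_out = False

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; self-closing <input ... />
        # tags are also routed here by HTMLParser
        if tag == "input" and ("title", "Next Grayed Out") in attrs:
            self.grayed_out = True


def is_last_page(html):
    finder = NextButtonFinder()
    finder.feed(html)
    return finder.grayed_out
```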

The code below shows how we scraped the Legacy of Slavery website:

#!/usr/bin/env python3
"""
Python script for scraping the results from http://slavery2.msa.maryland.gov/pages/Search.aspx

Based on an informative article by Todd Hayton:
http://toddhayton.com/2015/05/04/scraping-aspnet-pages-with-ajax-pagination/
"""

__author__ = 'Greg Jansen, Todd Hayton'

import os
import re
from urllib.parse import urlparse

import mechanize
from bs4 import BeautifulSoup  # the "lxml" parser used below must also be installed

# Submit buttons removed from the form before every postback, so that
# mechanize's submit() does not post one of them as the clicked button
# (which would make ASP.NET handle that button instead of __EVENTTARGET).
REMOVE_CONTROLS = [
    'ctl00$CenterContentPlaceHolder$btnTabBasic',
    'ctl00$CenterContentPlaceHolder$btnTabAdvanced',
    'ctl00$CenterContentPlaceHolder$btnTabBrowse',
    'ctl00$CenterContentPlaceHolder$btnTabDynamic',
    'ctl00$CenterContentPlaceHolder$btnTabIndex',
    'ctl00$CenterContentPlaceHolder$btnSubBasicSearch',
    'ctl00$CenterContentPlaceHolder$btnSubBasicClear',
]
SCRIPT_MANAGER = 'ctl00$CenterContentPlaceHolder$ScriptManager1'
DETAIL_POSTBACK = 'ctl00$CenterContentPlaceHolder$UpdatePanel1|ctl00$CenterContentPlaceHolder$rblDisplayMode$2'
DISPLAY_MODE = 'ctl00$CenterContentPlaceHolder$rblDisplayMode'


def chunks(items, n=4):
    """Split a list into consecutive groups of n (used to decode the XHR response)."""
    return [items[i:i + n] for i in range(0, len(items), n)]


class LegacyScraper(object):

    def __init__(self):
        self.url = "http://slavery2.msa.maryland.gov/pages/Search.aspx?browse=run&page=1&pgsize=50&id=ArchivalResearch_LoSC_RunawayAds"
        print(self.url)
        self.br = mechanize.Browser()
        self.br.addheaders = [('User-agent',
                               'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_6_8) AppleWebKit/535.7 (KHTML, like Gecko) Chrome/16.0.912.63 Safari/535.7')]

    def _select_form(self):
        # Select the page's form (if there are several, the last one wins)
        for form in self.br.forms():
            self.br.form = form
        self.br.form.set_all_readonly(False)  # hidden ASP.NET fields are read-only by default

    def _remove_buttons(self):
        for name in REMOVE_CONTROLS:
            self.br.form.controls.remove(self.br.form.find_control(name))

    def _dump_form(self):
        # Debug output: every control except the (very long) __VIEWSTATE
        print('\n'.join(['%s:%s (%s)' % (c.name, c.value, c.disabled)
                         for c in self.br.form.controls if c.name != '__VIEWSTATE']))

    def scrape(self, max_pages=None):
        '''
        Scrape all of the runaway slave ads.

        max_pages optionally limits the run for testing (an earlier version
        stopped after two pages); None continues until the last page.
        '''
        self.br.open(self.url)

        # GET DATABASE BROWSE LIST
        raw = self.br.response().read()
        s = BeautifulSoup(raw, "lxml")
        # Keep a copy of the original form; it is rebuilt for every "Next" postback
        saved_form = s.find('form', {"id": 'ctl00'}).prettify()

        # SUBMIT FORM: RADIOBUTTON Click "Detailed"
        self._select_form()
        self.br.form.new_control('hidden', '__ASYNCPOST', {'value': 'true'})
        self.br.form.new_control('hidden', SCRIPT_MANAGER, {'value': DETAIL_POSTBACK})
        self.br.form.fixup()
        self._remove_buttons()
        self.br.form['__EVENTTARGET'] = 'ctl00$CenterContentPlaceHolder$rblDisplayMode$2'
        self.br.form['__EVENTARGUMENT'] = ''
        self.br.form[DISPLAY_MODE] = ['detail']
        self._dump_form()
        self.br.submit()

        pageno = 1
        while max_pages is None or pageno <= max_pages:
            # The XHR response is bytes in Python 3 (UTF-8 assumed, ASP.NET's default)
            resp = self.br.response().read().decode('utf-8')

            # Decode the XHR response: fields separated by "|", in groups of four
            content = None
            view_state = None
            for b in chunks(resp.split('|')):
                if len(b) == 4:
                    if b[1] == 'updatePanel':
                        content = b[3]
                    if b[2] == '__VIEWSTATE':
                        print('GOT VIEWSTATE')
                        view_state = b[3]
            if content is None:
                raise RuntimeError('No updatePanel in response for page {0}'.format(pageno))

            self.save_page(content, pageno)

            # Check if last page: the Next button is replaced by a grayed-out one
            s = BeautifulSoup(content, 'lxml')
            if s.find('input', {'title': 'Next Grayed Out'}) is not None:
                break
            if view_state is None:
                raise RuntimeError('No __VIEWSTATE in response for page {0}'.format(pageno))
            pageno += 1

            # Regenerate form for next page from the saved copy of the original form
            html = saved_form.encode('utf8')
            resp = mechanize.make_response(html, [("Content-Type", "text/html")],
                                           self.br.geturl(), 200, "OK")
            self.br.set_response(resp)
            self._select_form()
            self.br.form['__EVENTTARGET'] = 'ctl00$CenterContentPlaceHolder$UpdatePanel1|ctl00$CenterContentPlaceHolder$imgButtonNext'
            self.br.form['__EVENTARGUMENT'] = ''
            self.br.form['__VIEWSTATE'] = view_state  # state returned by the previous response
            self.br.form.new_control('hidden', '__ASYNCPOST', {'value': 'true'})
            self.br.form.new_control('hidden', SCRIPT_MANAGER, {'value': DETAIL_POSTBACK})
            self.br.form[DISPLAY_MODE] = ['detail']
            self.br.form['ctl00$CenterContentPlaceHolder$ddlPage'] = [str(pageno)]
            self.br.form.fixup()
            self._remove_buttons()
            self._dump_form()
            self.br.submit()

    def save_page(self, rawcontent, pageno):
        ###################################
        # DATA SCRAPE/SAVE LOGIC GOES HERE
        ###################################
        print('Page {0}'.format(pageno))
        os.makedirs('pages', exist_ok=True)  # open() fails if the folder is missing
        with open("pages/page-{0}.html".format(pageno), "w", encoding="utf-8") as text_file:
            text_file.write(rawcontent)


if __name__ == '__main__':
    scraper = LegacyScraper()
    scraper.scrape()
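The scraper decodes each AJAX response by splitting it on "|" and grouping the pieces in fours, which breaks if a record's content itself contains a "|". A more robust sketch, assuming the ASP.NET AJAX partial-rendering format in which each record is written as `length|type|id|content|` with `length` giving the number of characters in `content`, reads each content field by its declared length instead. The names `parse_delta` and `find_update` are hypothetical.

```python
def parse_delta(response):
    """Split an AJAX partial-page response into (type, id, content) tuples.

    Assumes records of the form length|type|id|content| where length is the
    number of characters in content, so content may itself contain "|".
    """
    records = []
    pos = 0

    def read_field():
        # Read up to the next "|" and move past it
        nonlocal pos
        bar = response.index("|", pos)
        field = response[pos:bar]
        pos = bar + 1
        return field

    while pos < len(response):
        length = int(read_field())
        rtype = read_field()
        rid = read_field()
        content = response[pos:pos + length]
        pos += length
        if response[pos:pos + 1] != "|":
            raise ValueError("malformed record ending at offset {0}".format(pos))
        pos += 1
        records.append((rtype, rid, content))
    return records


def find_update(records):
    """Return (update panel HTML, __VIEWSTATE), matching the scraper's checks."""
    content = view_state = None
    for rtype, rid, value in records:
        if rtype == "updatePanel":
            content = value
        if rid == "__VIEWSTATE":
            view_state = value
    return content, view_state
```

In the scraper, `content, view_state = find_update(parse_delta(resp))` could replace the split-and-group loop without changing the rest of `scrape`.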