Volumes 1-2 (1 Jan – 30 Aug 1934): “Gold and Silver”

by GH, JJ, and RL (Group: Jellicle Cats)

Data Overview, Telling Our Story

The datasets used in this analysis are part of the Morgenthau Press Conferences collection, held in the Franklin D. Roosevelt Presidential Library & Museum in Dutchess County, New York, and available online on the library’s website. Volumes 1 and 2 of Series 2 in the collection, which cover the press conferences held by Secretary Morgenthau from January 1, 1934 to August 30, 1934, are available as digital copies in which scans of the conference transcripts can be viewed (JJ). The transcripts appear to have been typewritten and later scanned into the collection. They mostly follow a question-and-answer format, with members of the press posing questions and the answers clearly noted; Treasury Secretary Henry Morgenthau answers most of the questions. Each volume is organized by page number and by date, starting with the earliest press conference (GH).

After converting the content into a format suited to data entry and analysis, we were able to identify the most frequently discussed topics in these documents: silver, gold, bonds, the Treasury, and bills (GH). We identified these topics using the text-analysis tool Voyant Tools and the charting function of Google Sheets. The rest of this document details how we processed the data from Volumes 1 and 2 of the Morgenthau Papers, using OCR tools, ChatGPT, and careful proofreading to produce an accurate, easy-to-use copy of the scanned text in Google Sheets (GH).

Henry Morgenthau was appointed Secretary of the Treasury by President Franklin D. Roosevelt in 1934. During his first year as Treasury Secretary, Morgenthau oversaw such developments as the Silver Purchase Act, the nationalization of silver, and the Gold Reserve Act, as well as the regulatory demands that followed the end of Prohibition. Morgenthau took office just over four years after the stock market crash that began the Great Depression, inheriting a host of financial problems with no easy solutions. By his second year as president, Roosevelt had already launched a number of programs aimed at easing the financial stresses of the Depression, including the Civilian Conservation Corps (CCC), the Public Works Administration (PWA), and the Civil Works Administration (CWA), as well as the Federal Emergency Relief Administration and the Agricultural Adjustment Administration. These programs and administrations existed to help citizens and industries hit by the Depression (JJ).

In April 1933, before Morgenthau’s tenure, Roosevelt issued Executive Order 6102, which prohibited the hoarding of gold (Richardson et al. 2013). This was one step toward leaving the gold standard, which the United States did in April of that year (Samples 2022). In January 1934, Roosevelt signed into law the Gold Reserve Act, under which the gold value of the dollar was reduced to about 59 percent of its former level (Newton 2021). The Gold Reserve Act also authorized the president to issue certificates against any silver held by the Treasury, including silver received in payment of foreign debts, at the statutory value of $1.29 per ounce (Gold Reserve Act, 1934) (JJ).

In June 1934, the Silver Purchase Act was passed, which aimed to increase the proportion of silver in the U.S. monetary stock to one-fourth of its total value. The act authorized Morgenthau to purchase silver “at home or abroad” under terms he “may deem reasonable” (Silver Purchase Act, 1934). In August 1934, President Roosevelt issued Executive Order 6814, Requiring the Delivery of All Silver to the United States for Coinage, which nationalized silver in the United States (Exec. Order No. 6814, 1934). Morgenthau spoke extensively about this topic in August 1934, stressing that eminent domain was the power under which silver then in the country could be seized (Morgenthau 1934) (JJ).

Our research question was: What topics did Morgenthau discuss most often between January and August 1934? By identifying the most common words and researching the historical context, the group combined the data it gathered into a visual summary that offers another way of navigating the collection (GH).

Dataset Exploration related to Scale and Levels within Collection

To summarize, we ran the available scans of The Morgenthau Press Conferences, Volumes 1 and 2, through an Optical Character Recognition (OCR) tool. We then sent the OCR text to ChatGPT with a prompt asking it to correct the errors, compared ChatGPT’s output side by side with the scanned transcripts, and corrected any discrepancies to match the scans. We used an Excel document to track this process. Finally, we ran the text through Voyant Tools to see the frequency of each word and charted the most frequent words in a line graph (GH).

For the future, we recommend recording the most frequent subjects alongside these documents, so that future researchers can search them by topic as well as by year (GH).

Data Cleaning and Preparation

At an initial Zoom meeting, the group divided up the work of the project, with RL taking charge of the initial data imports and text cleaning, GH taking charge of data manipulation and modeling, and JJ taking charge of documentation (RL).

To begin, RL downloaded the assigned folders and used Adobe Acrobat to split the documents into files smaller than 5 MB, small enough for OCRSpace to process. RL ran OCRSpace’s OCR Engine 2 on each file and copied the generated text into a spreadsheet, then manually divided that text into individual records, one for each press conference. From this point, RL performed a series of text transformations in both OpenRefine and Google Sheets, using find-and-replace tools and regular expressions to normalize formatting and correct the most pervasive OCR errors (RL). RL also made a video for her groupmates explaining what the cleaning process could look like.
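
The find-and-replace step can be sketched in Python as below. This is a minimal illustration, not RL’s actual OpenRefine or Google Sheets expressions: the rule list `RULES`, the function `normalize`, and the sample sentence are hypothetical, and the misreading “Treasnry” stands in for whatever recurring OCR errors a given batch produces. The rules target OCR misreadings only, not spelling in the typed originals, which we deliberately preserved.

```python
import re

# Hypothetical cleanup rules, applied in order as (pattern, replacement).
# They target OCR misreadings, not spelling in the typed originals.
RULES = [
    (r"[ \t]+", " "),               # collapse runs of spaces and tabs
    (r" *\n *", "\n"),              # trim spaces around line breaks
    (r"-\n(?=[a-z])", ""),          # rejoin a word hyphenated across a line break
    (r"[“”]", '"'),                 # straighten curly double quotes
    (r"[‘’]", "'"),                 # straighten curly single quotes
    (r"\bTreasnry\b", "Treasury"),  # example of a recurring OCR misreading
]

def normalize(text: str) -> str:
    """Apply every rule to the whole text in sequence, then trim the ends."""
    for pattern, replacement in RULES:
        text = re.sub(pattern, replacement, text)
    return text.strip()

sample = "The  Treasnry will buy sil- \nver   at “home or abroad.”  \n"
print(normalize(sample))  # The Treasury will buy silver at "home or abroad."
```

Because the rules run in sequence, order matters: trimming the space after “sil-” first is what lets the hyphen rule rejoin “silver”. The hyphen rule would also merge genuine compounds split at a line break, such as “twenty-five”, so its output still needs checking against the scans.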

Modeling: Computation and Transformation

At this point, RL enlisted JJ and GH to help clean the texts of the press conferences. This involved reading the partially cleaned (but still error-ridden) text side by side with the original press conference transcripts and editing the OCR text to match the original. The process was very time-intensive, and the group had cleaned approximately a third of the OCR texts before changing approach (RL).
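
Side-by-side checking can be supported, though not replaced, by a word-level diff. The sketch below uses Python’s standard `difflib.SequenceMatcher` to list every span where an OCR line and its hand-corrected version differ; the function `word_changes` and both sample strings are hypothetical. A list like this makes it easier to audit corrections or to spot recurring misreadings (such as “1” for “l”) that could become new find-and-replace rules.

```python
import difflib

def word_changes(ocr_text: str, corrected_text: str) -> list[tuple[str, str, str]]:
    """List (operation, OCR words, corrected words) for every differing span."""
    a, b = ocr_text.split(), corrected_text.split()
    # autojunk=False stops difflib from ignoring very common words in long texts.
    matcher = difflib.SequenceMatcher(None, a, b, autojunk=False)
    changes = []
    for op, i1, i2, j1, j2 in matcher.get_opcodes():
        if op != "equal":
            changes.append((op, " ".join(a[i1:i2]), " ".join(b[j1:j2])))
    return changes

# Hypothetical OCR output and its hand-corrected version.
ocr = "Q Mr. Secretary, wi11 you buy si1ver abroad?"
fixed = "Q. Mr. Secretary, will you buy silver abroad?"
for change in word_changes(ocr, fixed):
    print(change)
# ('replace', 'Q', 'Q.')
# ('replace', 'wi11', 'will')
# ('replace', 'si1ver', 'silver')
```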

After a class meeting in which Dr. Buchanan permitted the use of artificial intelligence tools in the text cleaning project, RL tested both Google Notebooks and ChatGPT and found the latter useful for cleaning the OCR texts. The group considered the ethical implications of using an AI model for a project like this. In this case, the AI was not generating large amounts of new content (unlike AI-generated art, which is often based on dubiously collected datasets); it was instead replacing a slow, repetitive task. The group therefore decided that the benefits outweighed the drawbacks and moved forward with ChatGPT (RL).

RL provided the group with a useful prompt for ChatGPT: “Correct OCR errors in the following text. Prioritize language used by a treasury secretary.” Followed by the OCR transcription of a press conference, this prompt returned a much cleaner version of the text. RL recorded another video for her groupmates explaining the new cleaning process, which sped up the work significantly, and expanded JJ’s notes into a written description of the workflow. The group members then compared the generated text side by side with the original transcript and corrected any remaining errors. The errors that remained after the ChatGPT pass were mostly in numbers and names and were easily fixed, so the group quickly finished cleaning the rest of the text (RL).
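
Because the errors that survived ChatGPT were mostly in numbers and names, a small check can point proofreaders at the numbers to verify first. The sketch below assembles the group’s prompt for one press conference and then lists any numbers that appear in only one of the two versions (OCR input or model output). It does not call ChatGPT; the blank line between prompt and text, the function names, the regular expression, and the sample sentences are all assumptions for illustration.

```python
import re

# The prompt quoted above; the blank-line separator before the OCR text is assumed.
PROMPT = ("Correct OCR errors in the following text. "
          "Prioritize language used by a treasury secretary.")

# Digits, optionally grouped by commas or decimal points (e.g. 1.29, 1,000,000).
NUMBER = re.compile(r"\d+(?:[.,]\d+)*")

def build_prompt(ocr_text: str) -> str:
    """Prefix one press conference's OCR text with the cleaning prompt."""
    return f"{PROMPT}\n\n{ocr_text}"

def numbers_to_check(ocr_text: str, model_text: str) -> set[str]:
    """Return numbers found in only one version (symmetric difference)."""
    return set(NUMBER.findall(ocr_text)) ^ set(NUMBER.findall(model_text))

# Hypothetical OCR input and model output.
ocr = "silver at $1.29 an ounce, about 1,000,000 ounces"
model = "silver at $1.29 an ounce, about 1,000,600 ounces"
print(sorted(numbers_to_check(ocr, model)))  # ['1,000,000', '1,000,600']
```

A number that matches in both versions can still be wrong in both, so this only narrows the search; it does not replace reading the text against the scan.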

Modeling: Visualization

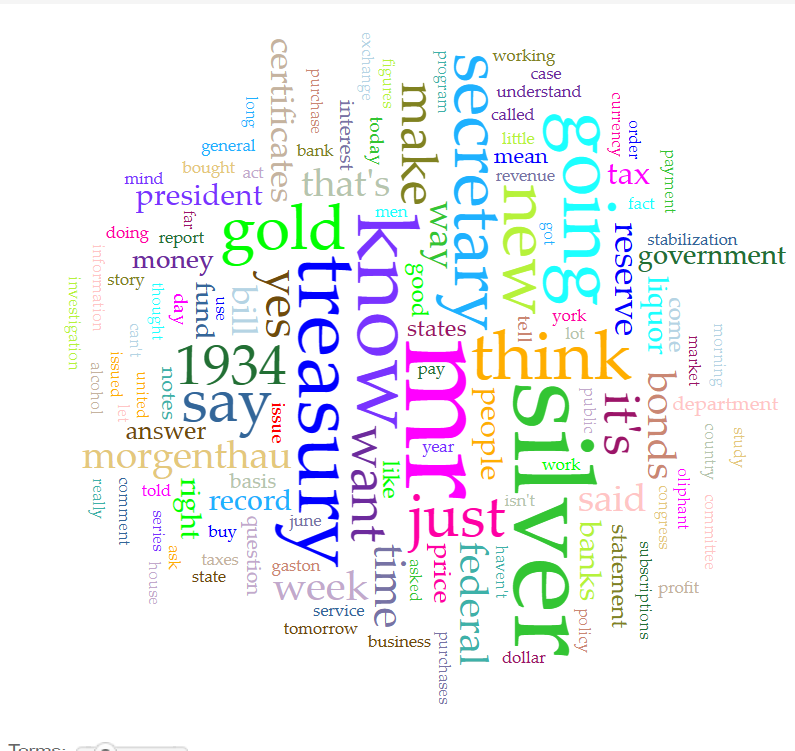

Once the text was clean, GH imported the spreadsheet into Voyant Tools, identified the most frequently used words in the dataset, and created a word cloud of the most commonly recurring words. Unfortunately, Voyant could not process the press conferences as individual records, so, to get a better sense of how word frequency changed over time, GH returned to Google Sheets. GH modified a formula posted on Super User by the user Variant (2010) to count the instances of a series of words and phrases in each press conference individually (RL). Figure 1 shows the Voyant Tools analysis of the most frequent words in our dataset. One of the visualizations Voyant Tools offers is a word cloud, in which the size of each word reflects how often it appears in the documents. While not the most precise visualization, it is helpful for an ‘at a glance’ understanding of the most relevant topics in the datasets (GH). Figure 2 shows the Voyant Tools word cloud of frequently used words in our dataset, with size corresponding to word frequency.

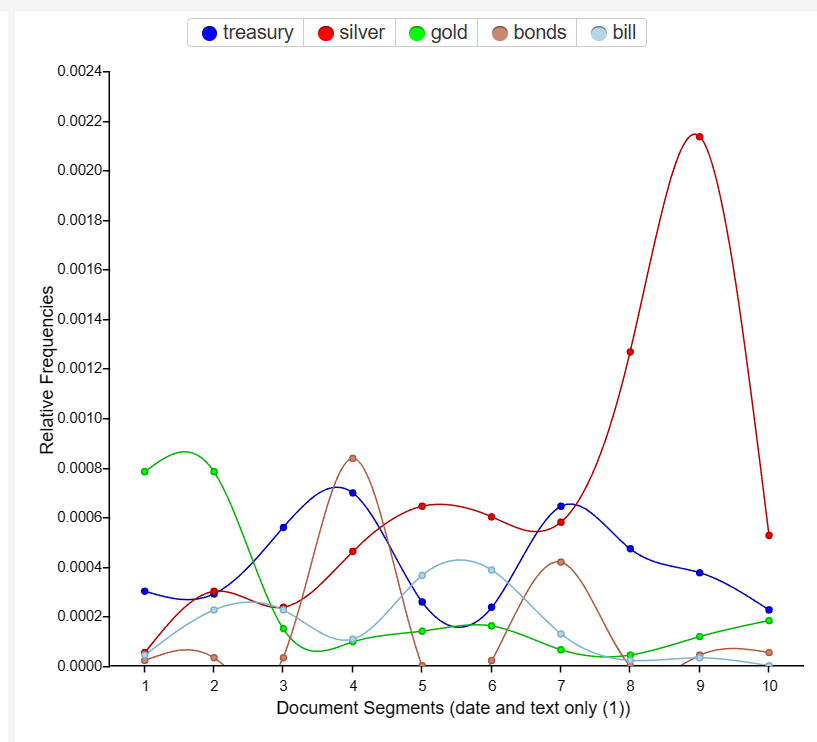
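
The group’s modified formula is not reproduced in this report. Assuming it follows the widely used spreadsheet idiom for counting a substring in a cell, `=(LEN(A2)-LEN(SUBSTITUTE(A2,"silver","")))/LEN("silver")`, where `A2` is a hypothetical cell holding one press conference, the same arithmetic looks like this in Python: deleting every occurrence of a term shortens the text by the term’s length once per occurrence, so dividing the length difference by the term’s length gives the count. `SUBSTITUTE` is case-sensitive, so the sketch lowercases the text first, much as wrapping the cell in `LOWER()` would. The function name and sample text are hypothetical.

```python
def count_substring(text: str, term: str) -> int:
    """Mirror (LEN(text) - LEN(SUBSTITUTE(text, term, ""))) / LEN(term)."""
    shortened_by = len(text) - len(text.replace(term, ""))
    return shortened_by // len(term)

# Hypothetical text of one press conference, lowercased as LOWER() would.
conference = "Gold was discussed. The gold clause and gold reserve followed.".lower()
counts = {term: count_substring(conference, term) for term in ["gold", "silver", "bonds"]}
print(counts)  # {'gold': 3, 'silver': 0, 'bonds': 0}
```

In Python, `conference.count("gold")` returns the same number directly; the length-difference form is shown only to mirror the spreadsheet formula.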

After GH removed articles and other irrelevant words, Voyant Tools gave a general visualization of word frequency over the course of the dataset. A line graph was the preferred format, but the tool could not read the dates in the Excel document and place them on the x-axis in a meaningful way (Figure 3). The tool appeared to be designed to break a single PDF document into segments, and it did the same to any file placed in it, regardless of document type (GH).

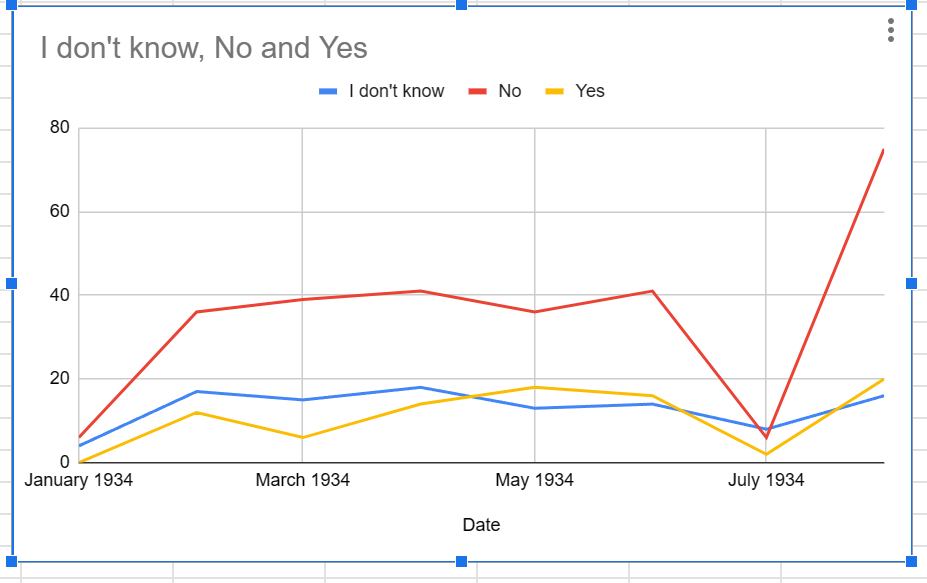
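
Removing articles and other low-information words (stopwords) before counting can be sketched as follows. The stop list here is a short, hypothetical one chosen for illustration; Voyant Tools applies its own built-in lists, which are much longer. The tokenizer keeps straight-apostrophe contractions such as “don't” together and drops punctuation; the function name and sample sentence are hypothetical.

```python
import re
from collections import Counter

# Short, hypothetical stop list (Voyant's built-in lists are far longer).
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "it",
             "that", "i", "you", "we", "mr"}

def top_words(text: str, n: int = 10) -> list[tuple[str, int]]:
    """Return the n most common words after lowercasing and dropping stopwords."""
    words = re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())
    return Counter(w for w in words if w not in STOPWORDS).most_common(n)

sample = "The gold and the silver. Silver bonds, silver bills, and gold."
print(top_words(sample, 2))  # [('silver', 3), ('gold', 2)]
```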

The group opted to take the frequent words from Voyant Tools into Excel to create a graph with dates on the x-axis. Once GH had selected a set of words, RL manipulated the data in our Excel document to find how many times each word occurred in each press conference, organized by date. GH then grouped the dates by month and made a line graph tracking the occurrences of each word across the press conferences on a monthly basis, from January through August 1934, showing some of the most common topics in the documents (GH). Figure 4 shows the Google Sheets graph of word frequency over time.
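
Collapsing per-conference counts into months can be sketched like this. The dates and counts below are invented for illustration, and summing within each month is an assumption about how the dates were grouped; the function name is hypothetical.

```python
from collections import defaultdict
from datetime import date

# Invented per-conference counts: (conference date, {term: count}).
records = [
    (date(1934, 1, 2), {"gold": 4, "silver": 1}),
    (date(1934, 1, 15), {"gold": 6, "silver": 0}),
    (date(1934, 8, 9), {"gold": 1, "silver": 7}),
]

def monthly_totals(rows):
    """Sum each term's per-conference counts into (year, month) buckets."""
    totals = defaultdict(lambda: defaultdict(int))
    for day, counts in rows:
        bucket = totals[(day.year, day.month)]
        for term, n in counts.items():
            bucket[term] += n
    # Sort by (year, month) so the result reads chronologically.
    return {month: dict(terms) for month, terms in sorted(totals.items())}

print(monthly_totals(records))
# {(1934, 1): {'gold': 10, 'silver': 1}, (1934, 8): {'gold': 1, 'silver': 7}}
```

Summing makes months with many press conferences look busier; dividing each month’s total by its number of conferences would be one way to compare months on an equal footing.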

We found that “gold” was mentioned most frequently at the beginning of the dataset, spiking in January 1934, which coincides with the passage of the Gold Reserve Act that month. Mentions of “silver” increased over time, rising near the end of the dataset in August 1934; this increase follows the passage of the Silver Purchase Act in June and coincides with Executive Order 6814 in August (JJ).

Finally, prompted by how often Mr. Morgenthau gave the frank answer “I don’t know,” the group looked at the frequency of the phrases ‘yes,’ ‘no,’ and ‘I don’t know.’ His frank answers demonstrated an interesting dynamic with the press, which we hoped to illustrate with the line graph in Figure 5 (GH).
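
Counting short words needs more care than counting topic words. A plain substring count of “no”, which is what a count-substring-in-a-cell formula performs, also matches the letters inside “know”, “not”, or “November”. The sketch below instead counts whole-word, case-insensitive matches using regular-expression word boundaries (`\b`), and treats curly apostrophes as straight ones so that “don’t” and “don't” match alike. The phrase list comes from the text above; the function name and the sample exchange are hypothetical.

```python
import re

PHRASES = ["yes", "no", "i don't know"]

def phrase_counts(text: str) -> dict[str, int]:
    """Count whole-word, case-insensitive occurrences of each phrase."""
    # Lowercase and straighten curly apostrophes (U+2019) before matching.
    normalized = text.lower().replace("\u2019", "'")
    return {
        phrase: len(re.findall(r"\b" + re.escape(phrase) + r"\b", normalized))
        for phrase in PHRASES
    }

# Hypothetical exchange, not quoted from the transcripts.
sample = "Q. Will you buy gold? A. No. I don’t know. Q. Not yet? A. Yes, I know nothing more."
print(phrase_counts(sample))       # {'yes': 1, 'no': 1, "i don't know": 1}
print(sample.lower().count("no"))  # 5 (a plain substring count overcounts)
```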

Ethics and Values Considerations

Because we were transcribing scanned documents, we felt it important to preserve the integrity of the originals as much as possible, including any spelling or grammatical errors we encountered in the scans. Leaving those errors unaltered helps capture the voice of Mr. Morgenthau and of the members of the press he spoke with during the press conferences (GH).

We were also aware of the potential ethical issues of using AI tools such as ChatGPT in this process. We used OCRSpace’s OCR Engine 2 to reduce the manual work of transcribing months’ worth of press conferences, but we felt it important to comb through the OCR text to maintain accuracy. When we opted to use ChatGPT to speed up the process ahead of our deadline, it produced fewer errors to correct, but it did not reduce how much we had to read to ensure that everything was transcribed exactly as it appears in the scans (GH).

Summary and Suggestions

Overall, we felt that the visualized data is consistent with the historical context, and that the collection could be a valuable research tool for anyone focusing on the actions of the Treasury Department during 1934 (GH).

References

- Exec. Order No. 6814, 33 F.R. 6814 (1934). https://www.federalregister.gov/citation/33-FR-6814

- Gold Reserve Act of 1934, H.R. 6976, Section 2 (1934). https://elischolar.library.yale.edu/ypfs-documents/11617/

- Morgenthau, H., Jr. (1934). Press Conference-Mr. Morgenthau [Vol. 2 Transcript]. FDR Library Digital Collection. http://www.fdrlibrary.marist.edu/archives/collections/franklin/index.php?p=collections/findingaid&id=536

- Newton, P. (2021, November 3). “A Year in History: 1934 Timeline.” Historic Newspapers. Retrieved March 17, 2024, https://www.historic-newspapers.com/blog/1934-historical-events/

- Richardson, G., Komai, A., & Gou, M. (2013, November 22). “Gold Reserve Act of 1934.” Federal Reserve History. https://www.federalreservehistory.org/essays/gold-reserve-act

- Samples. (2022, July 12). “A Year In History: 1933 Timeline.” Historic Newspapers. Retrieved March 17, 2024, https://www.historic-newspapers.com/blog/1933-timeline/

- Silver Purchase Act of 1934, H.R. 9745, Sections 2-3 (1934). https://fraser.stlouisfed.org/files/docs/historical/congressional/silver-purchase-act-1934.pdf

- [Treasury Department]. (1934, August 25). “Each Bottle of Imported Liquor Must Have a Strip Stamp Before it is Allowed to Leave Customs.” St. Louis Fed. Retrieved March 17, 2024, https://fraser.stlouisfed.org/title/press-releases-united-states-department-treasury-6111/volume-12-586851/bottle-imported-liquor-must-a-strip-stamp-allowed-leave-customs-560440

- Variant. (2010). “Count substring occurrences within a cell.” Super User. https://superuser.com/questions/167113/count-substring-occurrences-within-a-cell