Step 1: A Framework for Unlocking and Linking WWII Japanese American Incarceration Biographical Data - Context Based Data Manipuation and Analysis Process- Part 1¶

Data manipuation is the process of fine tuning data to organize and prepare the data for research purposes. Data manipulation can include sorting, filtering, cleaning, normalizing, and joining separate datasets. Once the data has been prepared, data analysis can be perfomed. According to the Office of Research Integrity, "Data Analysis is the process of systematically applying statistical and/or logical techniques to describe and illustrate, condense and recap, and evaluate data." Below are the steps performed for data manipulation and analysis process using Python programming language on the Japanese American World War II datasets. The modules are split into two parts - Part 1 and Part 2.

Acquiring or Accessing the Data¶

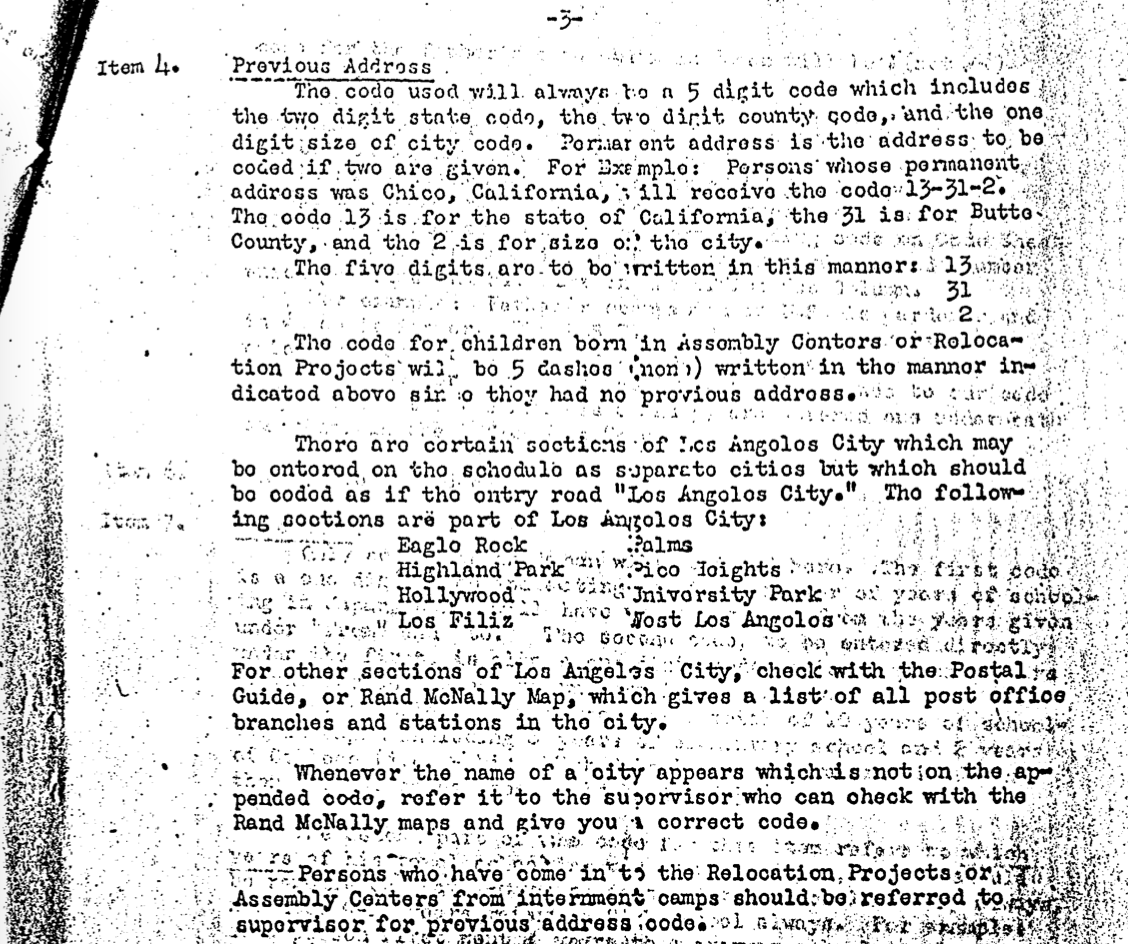

The data for this project comes from three disparate collections housed at the National Archives and Records Administration (NARA) this includes the War Relocation Authority (WRA), Final Accountability Roster (FAR), and Internal Security Case Reports, also referred to as Index Card or Incident Cards. The data sources are included in this module for the WRA and FAR as comma separated values (csv) files. The link below will direct you to those data files:

WRA [link] FAR [link] Incident Card upon request

The WRA dataset has X rows of data, the FAR has X rows, and the Incident Card has X rows.

To work with a csv file in Python, one of the first steps is to import a Python library called as 'pandas' which will help the program convert the csv file into a dataframe format or commonly called as a table format. We import the library into the program as shown below:

# Import libraries

# Pandas is used for data science/data analysis and machine learning tasks

import pandas as pd

# Numpy provides support for multi-dimensional arrays

import numpy as np

Using the pandas library, new dataframes can be created from the datasets imported into the notebook. Once the dataframes have been created from the imported files, the print() function is used to display the top first 5 rows in the dataframe.

# Creating a dataframe is essentially creating a table-like data structure that can read csv files, flat files, and other delimited data.

# Converting input data into a dataframe is the starting point with Python programming language for big data analytics

# Below command reads in the WRA, FAR, and Incident card datasets. The WRA and FAR should already be loaded in a folder called 'Datasets' wra_cleanup-09-26-2021.csv and Copy-far_cleanup-09-26-2021.csv

wradf = pd.read_csv('wra_cleanup-09-26-2021.csv',dtype=object,na_values=[],keep_default_na=False)

fardf = pd.read_csv('far_cleanup-09-26-2021.csv',dtype=object,na_values=[],keep_default_na=False)

incidentdf = pd.read_csv('incidentdfv1.csv',dtype=object,na_values=[],keep_default_na=False)

# Below command prints the first 5 records of the Incident Cards after the data is copied from the csv file

incidentdf.head(5)

--------------------------------------------------------------------------- FileNotFoundError Traceback (most recent call last) Cell In [2], line 5 1 # Creating a dataframe is essentially creating a table-like data structure that can read csv files, flat files, and other delimited data. 2 # Converting input data into a dataframe is the starting point with Python programming language for big data analytics 3 # Below command reads in the WRA, FAR, and Incident card datasets. The WRA and FAR should already be loaded in a folder called 'Datasets' wra_cleanup-09-26-2021.csv and Copy-far_cleanup-09-26-2021.csv 4 wradf = pd.read_csv('wra_cleanup-09-26-2021.csv',dtype=object,na_values=[],keep_default_na=False) ----> 5 fardf = pd.read_csv('far_cleanup-09-26-2021.csv',dtype=object,na_values=[],keep_default_na=False) 6 incidentdf = pd.read_csv('incidentdfv1.csv',dtype=object,na_values=[],keep_default_na=False) 8 # Below command prints the first 5 records of the Incident Cards after the data is copied from the csv file File ~/.local/lib/python3.10/site-packages/pandas/io/parsers/readers.py:948, in read_csv(filepath_or_buffer, sep, delimiter, header, names, index_col, usecols, dtype, engine, converters, true_values, false_values, skipinitialspace, skiprows, skipfooter, nrows, na_values, keep_default_na, na_filter, verbose, skip_blank_lines, parse_dates, infer_datetime_format, keep_date_col, date_parser, date_format, dayfirst, cache_dates, iterator, chunksize, compression, thousands, decimal, lineterminator, quotechar, quoting, doublequote, escapechar, comment, encoding, encoding_errors, dialect, on_bad_lines, delim_whitespace, low_memory, memory_map, float_precision, storage_options, dtype_backend) 935 kwds_defaults = _refine_defaults_read( 936 dialect, 937 delimiter, (...) 944 dtype_backend=dtype_backend, 945 ) 946 kwds.update(kwds_defaults) --> 948 return _read(filepath_or_buffer, kwds) File ~/.local/lib/python3.10/site-packages/pandas/io/parsers/readers.py:611, in _read(filepath_or_buffer, kwds) 608 _validate_names(kwds.get("names", None)) 610 # Create the parser. --> 611 parser = TextFileReader(filepath_or_buffer, **kwds) 613 if chunksize or iterator: 614 return parser File ~/.local/lib/python3.10/site-packages/pandas/io/parsers/readers.py:1448, in TextFileReader.__init__(self, f, engine, **kwds) 1445 self.options["has_index_names"] = kwds["has_index_names"] 1447 self.handles: IOHandles | None = None -> 1448 self._engine = self._make_engine(f, self.engine) File ~/.local/lib/python3.10/site-packages/pandas/io/parsers/readers.py:1705, in TextFileReader._make_engine(self, f, engine) 1703 if "b" not in mode: 1704 mode += "b" -> 1705 self.handles = get_handle( 1706 f, 1707 mode, 1708 encoding=self.options.get("encoding", None), 1709 compression=self.options.get("compression", None), 1710 memory_map=self.options.get("memory_map", False), 1711 is_text=is_text, 1712 errors=self.options.get("encoding_errors", "strict"), 1713 storage_options=self.options.get("storage_options", None), 1714 ) 1715 assert self.handles is not None 1716 f = self.handles.handle File ~/.local/lib/python3.10/site-packages/pandas/io/common.py:863, in get_handle(path_or_buf, mode, encoding, compression, memory_map, is_text, errors, storage_options) 858 elif isinstance(handle, str): 859 # Check whether the filename is to be opened in binary mode. 860 # Binary mode does not support 'encoding' and 'newline'. 861 if ioargs.encoding and "b" not in ioargs.mode: 862 # Encoding --> 863 handle = open( 864 handle, 865 ioargs.mode, 866 encoding=ioargs.encoding, 867 errors=errors, 868 newline="", 869 ) 870 else: 871 # Binary mode 872 handle = open(handle, ioargs.mode) FileNotFoundError: [Errno 2] No such file or directory: 'far_cleanup-09-26-2021.csv'

Below are the variables in the Incident Cards that were chosen to be cleaned and manipulated for use in the following steps.

Offense -- This indicates the offenses assigned to Japanese Americans by government officials Case # -- A case number was assigned according to the type of offense Date -- This indicates the date the offense took place Residence -- The location of where that specific individual resided Other -- This indicates additional notes that were recorded by officials on the Index, "Incident Cards" First Name -- The first name of the incarcerated Japanese American Last Name -- The last name of the incarcerated Japanese American

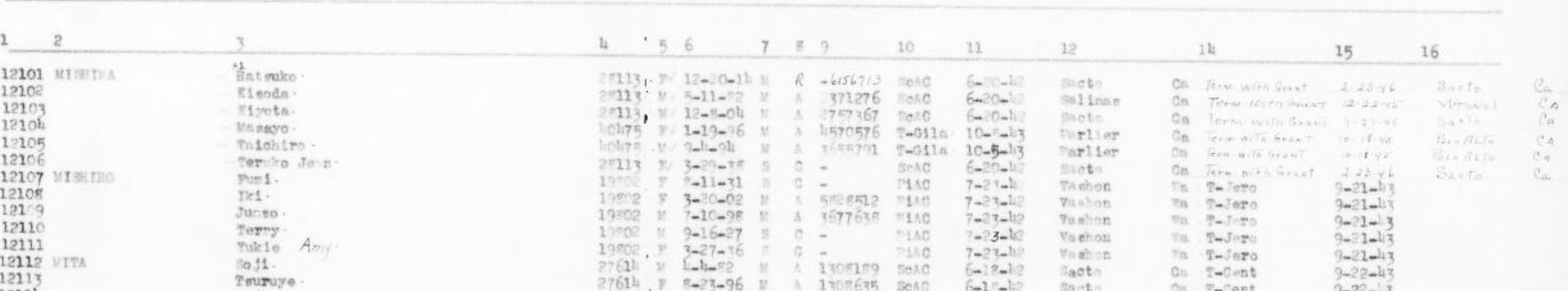

Below are the variables in the WRA and FAR that were chosen to be cleaned and manipulated for use in the following steps. First Name -- The first name of the incarcerated Japanese American Last Name -- The last name of the incarcerated Japanese American Family Number -- A number assigned to families upon entry into the assembly centers

We anticipated errors and misinterpretation of names, numbers, etc. in the data since these databases were either transcribed manually or physical copies digitized using OCR techniques. We approached this by individually exploring and cleaning the selected columns using the text and numerical operation functions in Python. To begin, we looked at the datasets holistically to identify variables that would allow for us to generate meaningful stories and visualizations. After a list of variables were confirmed, we analyzed each of them in detail to document bad data and eliminate them if possible, modify data types, exclude them from the final visualizations if found to be invalid, etc.

This project involved team members with diverse backgrounds and knowledge in the technical, historical, and archival domains. The project presented opportunties for collaboration and individual work. We decided it was more productive to have a hybrid approach where we analyzed the data on our own and created sub-projects and reported our results and progress back to the group for discussion. With respect to the analysis performed on the dataset, decisions were data-driven or historical facts driven.

Through researching the literature, conversations with community partner Geoff Froh, from Densho, and experts in the field, the team members were able to follow steps where specific variables were identified and shared among the entire group for their inputs before finalizing the results.

Case Number (Issue of Incident Card)¶

Through many conversations and pulling in our differing expertise we were able to develop ideas that let us integrate a range of methods and techniques to exhibit multifaceted perspectives of incarcerated Japanese Americans during World War II. Out of these discussions one of the many sub projects that developed was Spatial Analysis of incarcerated Japanese Americans recorded as being invovled in the uprising ('riot') at Tule Lake on November 4, 1943.

Additionally, much of our discussion was also centered on how the data should be analyzed, presented, and shared without impacting the sensitivity of the people involved, especially since this set of collection is unique.

One of the variables conisdered for this project was case number. This field indicates the case number assigned to offenses that occured at Tule Lake. Due to the case number being entered on the index cards by different government personnel the formatting was inconsistent. There were a number of issues with the case number field in the original dataset. Examples of the variations of case number formats includes 'A7-'; ‘A-7 ’; A-7- also have 'A-7-P' also have 'A-7-p'; 'A-7p' Also have 'A-7P'; ‘A-7,’; 'A7,'.

# The below command prints out the descriptive details of the column 'casenumber'

incidentdf["casenumber"].describe()

count 15748 unique 4475 top freq 680 Name: casenumber, dtype: object

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

# The below command displays an array of unique date values in the 'casenumber' column

incidentdf["casenumber"].unique()

array(['A-607', 'A7-P138', 'A-7 Page 1465', ..., '#477', 'A-7 Page 1393',

'A-7-P180'], dtype=object)

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

Indentifying Cases of 'Riot'¶

As can be seen from above, there are varying formats for case number, some have dashes, others have a lowercase or a capital 'P', and some are missing the letter but have the word 'Page', etc. To identify and capture all of the variations of case number we can use regular expressions within the contains() function as shown below:

# Apply regex will help us find all those variations

caseno = incidentdf[incidentdf['casenumber'].str.contains("A[-\s]?7([p,?-p\s-].*)?$", na=False)]

/opt/conda/lib/python3.8/site-packages/pandas/core/strings/accessor.py:101: UserWarning: This pattern has match groups. To actually get the groups, use str.extract. return func(self, *args, **kwargs)

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

# The below command prints out the number of times these variations appear within the dataframe.

caseno['casenumber'].unique

<bound method Series.unique of 1 A7-P138

2 A-7 Page 1465

14 A-7-P284

20 A-7 Page 543

26 A-7-P284

...

15742 A-7 Page 1380

15743 A-7 Page 1376

15744 A-7 Page 1377

15745 A-7-P180

15746 A-7-P95

Name: casenumber, Length: 3850, dtype: object>

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

As can be seen above there are, give or take, about 3,850 cases associated with 'riot' that meet the regular expression criteria used to capture several variations.

**Note, this number does not necesarily mean we captured all of the case numbers linked to 'riot',. Since the data was manually entered on the index cards by government personnel it is likely there are errors and some cards tied to 'riot' are not captured in this variation.

Another alternative for looking for cards linked to 'riot' is to search through the offense column. As shown below.

# Search through the 'newoffense' column using contains function

noffriot = incidentdf[incidentdf['newoffense'].str.contains('riot')]

noffriot.count()

Image# 3872 newdate 3872 casenumber 3872 offense 3872 newoffense 3872 DEATH 3872 name 3872 lastname 3872 firstname 3872 Other Names (known as) 3872 Job/Role/Title 3872 Residence 3872 NEW-residence 3872 X 3872 Y 3872 otherpeople 3872 Age 3872 Family / Alien No. 3872 Other 3872 Unnamed: 19 3872 dtype: int64

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

After performing the filter, the output of cards linked to 'riot' is higher than that of the case number column. Let's apply the unique function to view what unique values the contains function captured when 'riot' was searched for in the new offense column.

# the unique function will return unique values in a series aka column.

noffriot['newoffense'].unique()

array(['riot'], dtype=object)

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

As shown above other values that contain the word 'riot' like 'disorderly conduct false riot call' and 'assualt attempted riot' are also returned. Though these cases may be associated with the 'riot' that occured on 11/4/1944 we want to focus on cases that only contain 'riot'.

Regular expressions can also be applied here to filter and only return values that contain 'riot'. Regular expressions are a sequence of characters, that can sometimes comprise of several characters, used to search through strings for patterns. Though regular expressions are helpful and a powerful tool, they can also be challenging as some characters have to be in a specific order. Here are a few helpful tools to introduce regular expressions: Regular Expressions HOWTO, pythex.org, How to use Regex in Pandas, AutoRegex.

Below we use the ^ regular expression to return values where 'riot' is at the start of the string. By simply using this character we have reduced the number of values returned which more closely aligns with the number of cases linked to 'riot'.

# The ^ is used to search for values at the start of a string.

noffriot = incidentdf[incidentdf['newoffense'].str.contains('^riot')]

noffriot.count()

Image# 3860 newdate 3860 casenumber 3860 offense 3860 newoffense 3860 DEATH 3860 name 3860 lastname 3860 firstname 3860 Other Names (known as) 3860 Job/Role/Title 3860 Residence 3860 NEW-residence 3860 X 3860 Y 3860 otherpeople 3860 Age 3860 Family / Alien No. 3860 Other 3860 Unnamed: 19 3860 dtype: int64

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

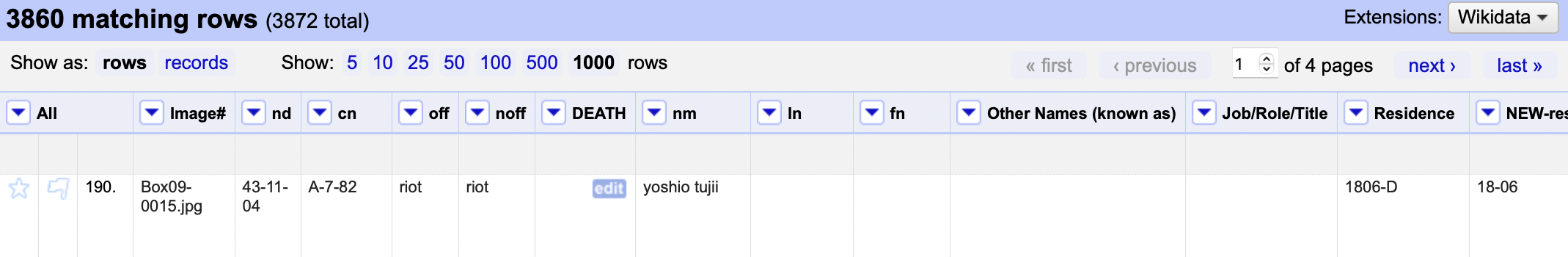

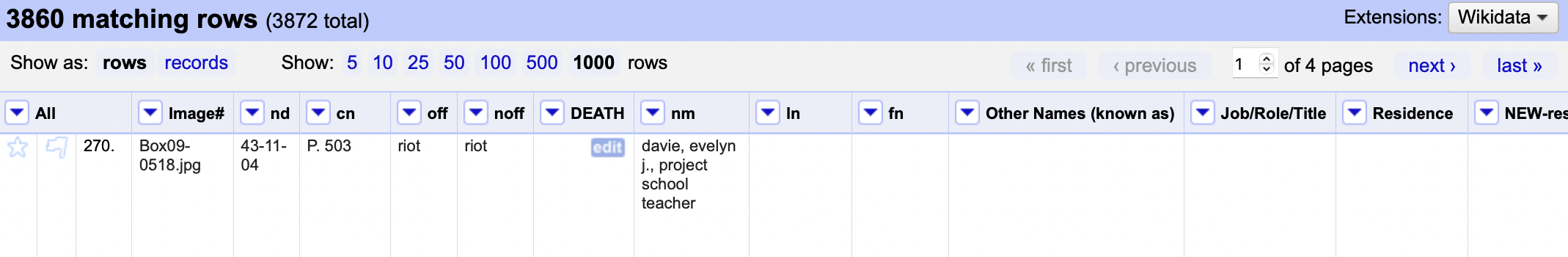

To further explore the differences in the results of 'riot' in the new offense and the case number column we can use OpenRefine. OpenRefine will allow us to quickly view, filter, cluster and confirm the accuracy of case numbers associated with 'riot'.

In the instance seen below, the difference is a result of the case number being inncorrectly entered or designated at the initial time of entry. **NOTE: It is unclear whether this error is an outcome of the initial data entry or a result of the data being manually entered from the index cards to a spreadsheet.

More time could be spent refining the regular expression string used to search through the case numbers, but this is a good opportunity to practice using regular expressions on your own.

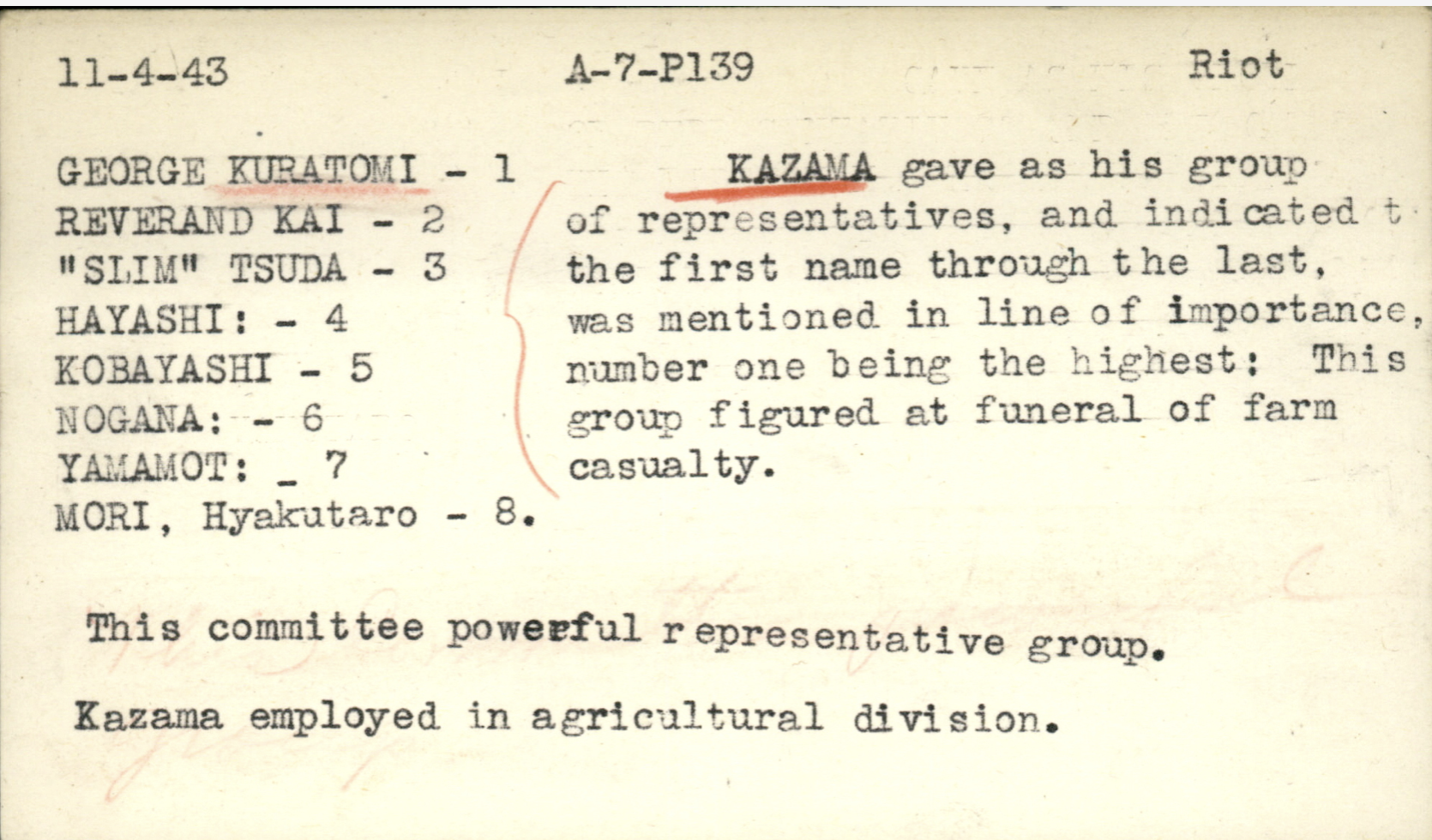

Discovering Influential Figures in the 'Riot'¶

Once the incident card data has been fine tuned and reflects cases only related to 'riot' we can then begin exploring the dataset to discover individuals who's names appears the most among the 'riot' cards. The decision was made to begin this project by focusing on the key figures invovled in the 'riot' with the possiblility of expanding and applying these methods to a larger dataset.

The case number series that was filtered using the contains function earlier in the process will be used to identify the individuals recorded as being part of the 'riot'. As a reminder, when the data was filtered, caseno was assigned to that new output so we know that we are working specifically with data associated with case numbers linked to 'riot'. By applying values count to the caseno series we can identify these key or influential figures.

The values count function will return objects, in descending order, that contain counts of unique values. So the first element will always be the most frequently occuring element, as shown below:

# The below command returns count values of the names in the caseno series

riotcount = caseno['name'].value_counts()

riotcount.head(30)

kuratomi 58 kobayashi 40 kai 36 tom yoshio kobayashi 23 "b" area 22 tsuda 20 kobayashi, tom yoshio 20 rev. kai 19 yamane, tokio 18 tom kobayashi 17 tokio yamane 16 mori 16 george kuratomi 15 "b" area report 15 tokunaga 15 kazama 14 kuratomi, george 14 terada, singer 14 oshita, tomoji 14 kazama, tomio 14 shigenobu murakimi 13 yoshiyama, tom 13 isamu uchida 13 oki, kakuma 12 yamauchi, dr. 11 saito, shu 11 oscar itani 11 abe, tetsuo 11 tetsuo abe 11 nakao, masaru 11 Name: name, dtype: int64

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

As shown above, the name Kuratomi appears the most frequently among the 'riot' incident cards. Following, Kuratomi is Kobayashi, Kai, Tom Yoshio Kobayashi, Tsuda, Reverend Kai, and Tokio Yamane, to name a few.

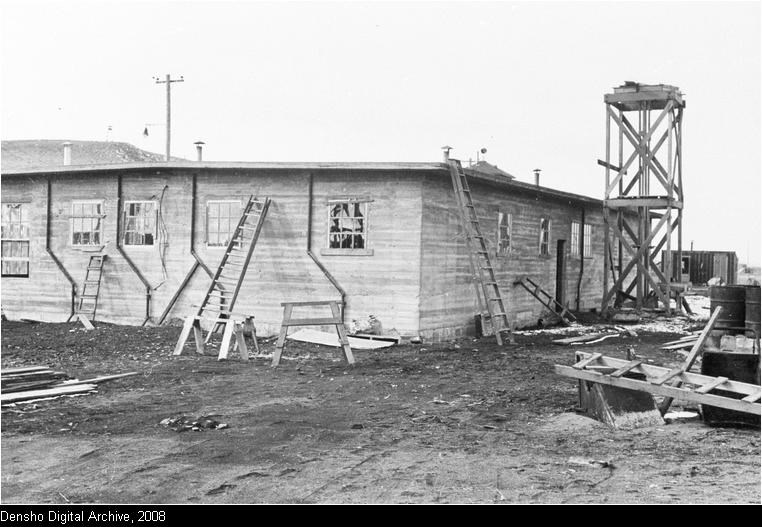

Also recorded in this count is "b" area or "b" area report. Area B is a significant location in Tule Lake. After the 'riot' of November 4, suspected "troublemakers" were picked up--it is recorded around 209 in November and 107 in December--and placed in "Area B" also known as the "Surveillance Area" and later designated as the "Stockade". The barracks underwent 24 hour surveillance by armed soldiers from watchtowers, were searched almost daily for "contraband", visitors were not permitted except by permission from the Tule Lake Director, and curfew was set from 7:00 P.M. to 6:00 A.M. The stockade was situated northwest of the hospital for the entire period of its existence, November 5, 1943, to August 24, 1944, and enclosed by a high wire fence.

From the output above, we'll take 25 of the individuals listed and explore their geographical spaces in more detail; one of those being, Kuratomi.

Now that we've organized our data and identified key figures, we are prepared to begin gathering geographic information. All three datasets--the WRA, FAR, and Incident Cards-- will be used to identify different geographical spaces, specifically the WRA will be helpful for pinpointing places of origin before being forced to an assembly center as well as the location of the first assembly center, the FAR will be useful for identifying forced migration to internal and external camp locations, and the Incident Cards will be helpful for locating where individuals lived during incarceration.

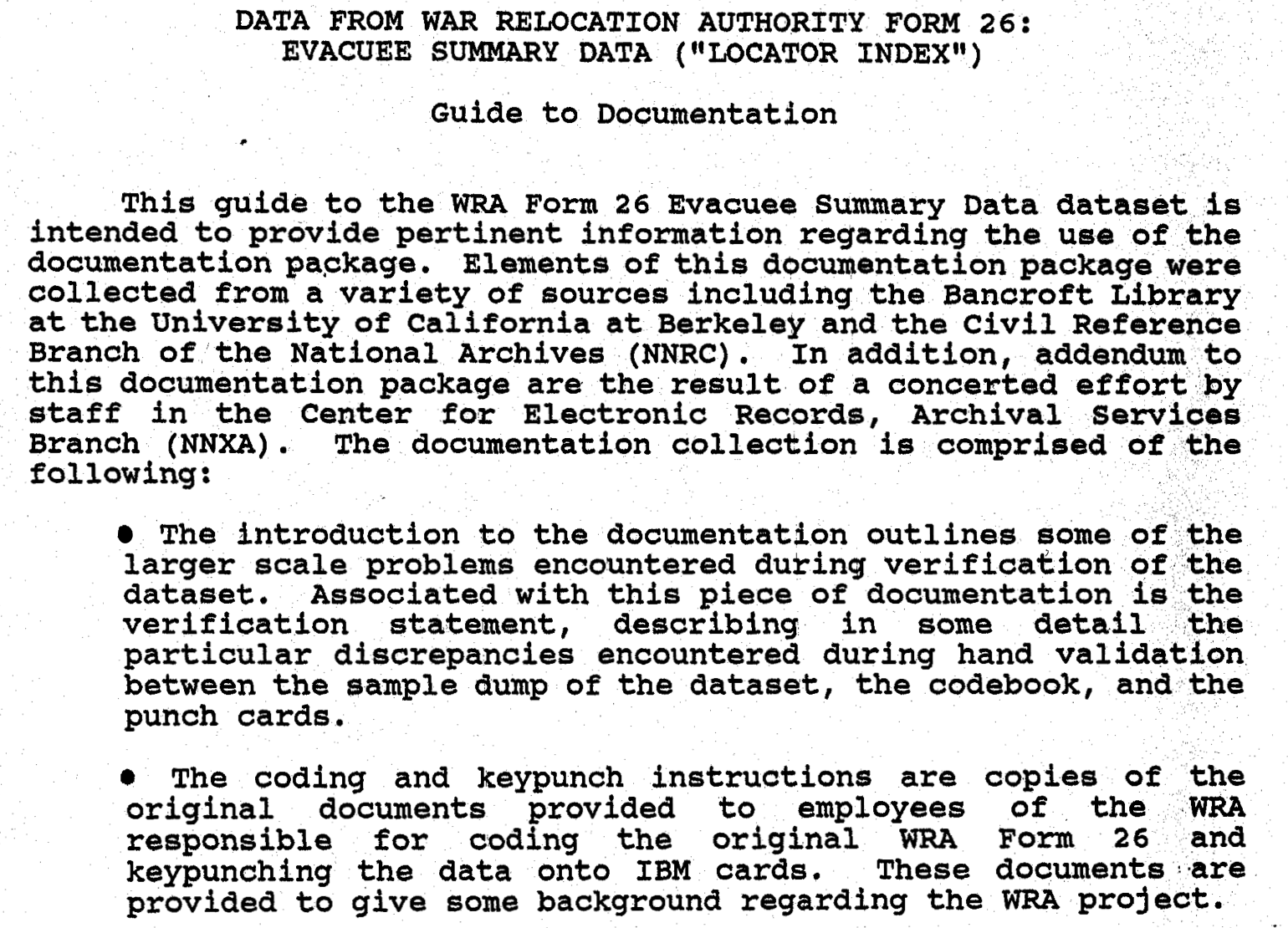

The “Japanese-American Internee Data File, 1942 – 1946”, with camp intake records of evacuated Japanese-Americans, also known as WRA Form 26 is one of the major federal records associated with the War Relocation Authority (WRA), the agency established to handle the forced relocation and detention of Japanese-Americans during World War II. At the time, clerks recorded people’s information on the WRA Form 26 when brought into assembly centers or camps, afterwards, 34 fields were encoded onto punch cards. Later on the National Archives and Records Administration (NARA) digitized these punch cards and permenantly houses these records. The WRA data files are publically available.

The “Final Accountability Rosters (FAR) of Evacuees at Relocation Centers, 1944-1946, records the camp outtake of evacuees at the time of their final release or transfer. During this time, clerks recorded outtake information on individuals as they exited camps. It's important to note that some individuals exited multiple camps due to forced transfers and have more than one FAR record. The FAR records were later transcribed by Densho, Ancestry.com, among others.

The Index Cards, also called, Incident Cards are Internal Security Case Reports” index cards, from the War Relocation Authority (Record Group 210 from 1941-47) records series. The Incident Cards reference narrative reports prepared by camp investigators, police officers, and directors of internal security, relating cases of alleged “disorderly conduct, rioting, seditious behavior,” etc. at each of the 10 camps, with detailed information on the names and addresses in the camps of the persons involved, the time and place where the alleged incident occurred, an account of what happened, and a statement of action taken by the investigating officer. There are 25,045 index cards, 63% of which (15,648) come from the Tule Lake concentration camp.

Finding Names in the Incident Cards, WRA, & FAR¶

Though this data is rich with information the WRA, FAR, and Incident Cards present unique challenges for us. For example, at times it was difficult to determine the order of a persons first and last name as well as many of the names are similiar to one another with one or two characters being different. Keeping this in mind, we have to be conscientious we are looking at the correct individual(s). A method for doing this is comparing information across the three separate data files like age, residence, family, individual or alien registration number, or using details found in the Other column. At other times we found that information was incomplete, for example, the WRA has truncated last names, leaving us to deduce that we were looking at the correct person after comparing the previously mentioned fields.

As shown previously, we will follow a similiar process by using the contains function to locate data on Kuratomi. Because I am familiar with the data, I know Kuratomi's first name is George--a good practice to get in the habit of is to become familiar with the data you are working with before manipulating and analyzing it.

By performing a simple contains search, as shown below, on the name column we are supplied with Kuratomi's residence, including other valueable information like his age, other individuals linked to Kuratomi, case numbers, as well as notes that are recorded on that specific index card which can be helpful for identifying this is correct person.

By looking at the table below, under New-residence we find the the location of George's barrack, 6605-E. The address can be interpreted in the following manner, 66 as the block, 05 as the ward and E as the assigned barrack.

# The contains function will return values with the element 'George Kuratomi'

incidentdf[incidentdf['name'].str.contains("george kuratomi", na=False)]

| Image# | newdate | casenumber | offense | newoffense | DEATH | name | lastname | firstname | Other Names (known as) | Job/Role/Title | Residence | NEW-residence | X | Y | otherpeople | Age | Family / Alien No. | Other | Unnamed: 19 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2338 | Box10-0217.jpg | 43-11-04 | A-7-P417 | riot | riot | george kuratomi | Note smuggled out on one of prisoners being re... | |||||||||||||

| 2366 | Box10-0245.jpg | 43-11-04 | A-7-P139 | riot | riot | george kuratomi | 30 | Chairman, Age 30 years, Came from Jerome. List... | ||||||||||||

| 2368 | Box10-0247.jpg | 43-11-04 | A-7-P92 | riot | riot | george kuratomi | 6605-E | 66-05 | 635285.2772 | 4638923.446 | Youth leader from Jerome - given as possible l... | |||||||||

| 2369 | Box10-0248.jpg | 43-11-04 | A-7P89 | riot | riot | george kuratomi | One of names listed on "Approved Segregation C... | |||||||||||||

| 5358 | Box12-0840.jpg | 43-11-04 | A-7-P139 | riot | riot | george kuratomi -1 | kuratomi | george | reverend kai -2, "slim" tsuda - 3, hayashi -4,... | Kazama gave as his group of representatives, a... | ||||||||||

| 6326 | Box13-0614.jpg | 43-11-04 | A-7-P404 | riot | riot | george kuratomi | YOSHITOMO HONDA (Alien) 3714-D 21896\n\nSent f... | |||||||||||||

| 6328 | Box13-0616.jpg | 43-11-04 | A-7-P402 | riot | riot | george kuratomi | Chairman of meeting at Mess Hall #15 on 11/4/4... | |||||||||||||

| 6334 | Box13-0622.jpg | 43-11-04 | A-7-P373 | riot | riot | george kuratomi | TAKAHASHI\n\nMentioned during questioning of G... | |||||||||||||

| 6335 | Box13-0623.jpg | 43-11-04 | A-7-P377 | riot | riot | george kuratomi | UCHIDA\n\nName brought up during questioning o... | |||||||||||||

| 6336 | Box13-0624.jpg | 43-11-04 | A-7-P373 | riot | riot | george kuratomi | TOM KOBAYASHI\n\nMentioned during questioning ... | |||||||||||||

| 6337 | Box13-0625.jpg | 43-11-04 | riot | riot | george kuratomi | MITS\n\nName brought up during questioning of ... | ||||||||||||||

| 6338 | Box13-0626.jpg | 43-11-04 | A-7-P375 | riot | riot | george kuratomi | SUGIMOTO\n\nName brought up during questioning... | |||||||||||||

| 6339 | Box13-0627.jpg | 43-11-04 | A-7-P390 | riot | riot | george kuratomi | MR. KOMIYA\n\nMr. Komiya is the Japanese secre... | |||||||||||||

| 6376 | Box13-0664.jpg | 43-11-04 | A-7-P89 | riot | riot | george kuratomi | One of names listed on "Approved Segregation C... | |||||||||||||

| 6408 | Box13-0696.jpg | 43-11-04 | A-7-P417 | riot | riot | george kuratomi | GEORGE\n\nNote smuggled out on one of prisoner... | |||||||||||||

| 6417 | Box13-0705.jpg | 43-11-04 | A-7-P139 | riot | riot | george kuratomi | 30 | Listed as first in importance by KAZAMA in con... | ||||||||||||

| 6418 | Box13-0706.jpg | 43-11-04 | A-7-P92 | riot | riot | george kuratomi | 6605-E | 66-05 | 635285.2772 | 4638923.446 | Yout leader from Jerome - given as possible le... | |||||||||

| 6421 | Box13-0709.jpg | 43-11-04 | A-7-P286 | riot | riot | george kuratomi, chairman | f.d. amat, consult of the spanish embassy / h.... | The above were present at meeting at Dining Ha... |

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

The .iloc is used to view the details of the notes in the Other column. The .iloc function is an integer position based function, meaning you can use indexing for selection of a particular position or cell and row within a table (dataset or also commonly called an array).

# The below function returns information the cell with the index of 6334, 18

incidentdf.iloc[5358,18]

"TAKAHASHI\n\nMentioned during questioning of Geroge Kuratomi on 12/3/43 in connection with Kashima's funeral."

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

As with the Incident Card data, performing the contains function allows us to extract values containing Kuratomi.

Demonstrated below, George Kuratomi, can be found in the second row of the WRA datafile. Similiar to the Incident Cards, the WRA provides personal information that can be used to confirm we are looking at the correct person. To view additional details of a row, the .iloc function can be applied here as well. In the table below we can extract the following geographic information for Kuratomi: original state, assembly center, and first camp. This data will be helpful later to find the latitute and longitude coordinates for these separate locations.

# The contains function will return results with Kuratomi.

wradf[wradf['NEW-m_lastname'].str.contains("KURATOMI", na=False)].head(5)

| m_dataset | m_pseudoid | m_camp | m_lastname | NEW-m_lastname | m_firstname | NEW-m_firstname | m_birthyear | NEW-m_birthyear | m_gender | ... | w_citizenshipstatus | w_highestgrade | w_language | w_religion | w_occupqual1 | w_occupqual2 | w_occupqual3 | w_occuppotn1 | w_occuppotn2 | w_filenumber | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 40132 | wra-master | 2-poston_kuratomi_1934_akiko | 2-poston | KURATOMI | KURATOMI | AKIKO | AKIKO | 1934 | 1934 | F | ... | Did not attend Japanese Lang. School: Does not... | No schooling or kindergarten [J] | Not applicable (11 years old and under) | Buddhist [1] | 200060 | |||||

| 40133 | wra-master | 6-jerome_kuratomi_1915_george | 6-jerome | KURATOMI | KURATOMI | GEORGE | GEORGE | 1915 | 1915 | M | ... | Did not attend Japanese Lang. School: Has SS #... | In U.S.: High School 4 [I] | Japanese speak, read (& write), English speak,... | Buddhist [1] | 71 | 170 | 27 | 6 | 956857 | |

| 40134 | wra-master | 6-jerome_kuratomi_1897_hide | 6-jerome | KURATOMI | KURATOMI | HIDE | HIDE | 1897 | 1897 | F | ... | Did not attend Japanese Lang. School: Has AR #... | In Japan: High School 4 [D] | Japanese speak, read (& write), English speak | Buddhist [1] | 625 | 614 | 952839 | |||

| 40135 | wra-master | 6-jerome_kuratomi_1923_ikuko | 6-jerome | KURATOMI | KURATOMI | IKUKO | IKUKO | 1923 | 1923 | F | ... | Did not attend Japanese Lang. School: Has neit... | In U.S.: College 1 [5] | Japanese speak and English speak, read (& write) | No religion, undecided, none, atheist, agnosti... | 125 | 4 | 952840 | |||

| 40136 | wra-master | 6-jerome_kuratomi_1884_iwami | 6-jerome | KURATOMI | KURATOMI | IWAMI | IWAMI | 1884 | 1884 | M | ... | Did not attend Japanese Lang. School: Has both... | In Japan: High School 2 [B] | Japanese speak, read (& write), English speak,... | Baptist [A] | 72 | 73 | 303 | 433 | 953282 |

5 rows × 46 columns

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

# Apply the .iloc to a row index to view all information within that given row

wradf.iloc[40133]

m_dataset wra-master m_pseudoid 6-jerome_kuratomi_1915_george m_camp 6-jerome m_lastname KURATOMI NEW-m_lastname KURATOMI m_firstname GEORGE NEW-m_firstname GEORGE m_birthyear 1915 NEW-m_birthyear 1915 m_gender M m_originalstate CA m_familyno 4119 NEW-m_familyno 4119 m_familyno_normal 4119 NEW-m_familyno_normal 4119 m_individualno 04119A m_altfamilyid m_altindividualid m_ddrreference m_notes w_assemblycenter Santa Anita w_originaladdress San Diego, California [13&14] w_birthcountry Father-Japan, Mother-Japan [1] w_fatheroccupus Unskilled laborers (except farm) [9] w_fatheroccupabr Unknown [&] w_yearsschooljapan 7 w_gradejapan 1 - 8 yrs. of school only [1] w_schooldegree None [0] w_yearofusarrival w_timeinjapan 5 yrs. but less than 10 yrs. in Japan [4] w_notimesinjapan w_ageinjapan Between ages 0-9 and also 10-19 and also 20 an... w_militaryservice No Military or Naval Service, No Public Assist... w_maritalstatus Single w_ethnicity Japanese, No spouse [4] w_birthplace California w_citizenshipstatus Did not attend Japanese Lang. School: Has SS #... w_highestgrade In U.S.: High School 4 [I] w_language Japanese speak, read (& write), English speak,... w_religion Buddhist [1] w_occupqual1 71 w_occupqual2 170 w_occupqual3 w_occuppotn1 27 w_occuppotn2 6 w_filenumber 956857 Name: 40133, dtype: object

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

Lastly, we will look through the FAR to locate exit locations for George Kuratomi. Exit locations include forced movements from one camp to another throughout incarceration.

A quick scan of the table below, we find that George Kuratomi doesn't appear among the listed names, this is because 'George' is an evangelical name used by Toshio Kuratomi. This was a phenomena discovered by simply becoming familiar with the data. In fact, George was not the only individual to use an evangelical name during incarceration. Now that we are equipped with this information, this will require us to do further investigation so we are careful not to rule out a person because their doesnt immediately appear in a dataset. It may end up being the case here where an alternative name is used.

# Apply the contains function to return results for Kuratomi

fardf[fardf['NEW-last_name_corrected'].str.contains("Kuratomi", na=False)]

| FAR Exit Dataset | original_order | far_line_id | family_number | NEW-family_number | last_name_corrected | NEW-last_name_corrected | last_name_original | first_name_corrected | first_name_original | ... | type_of_final_departure | NEW-type_of_final_departure | date_of_final_departure | final_departure_state | camp_address_original | camp_address_block | camp_address_barracks | camp_address_room | reference | notes | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 16101 | granada1 | 3820 | 3713 | 4091 | 4091 | Kuratomi | Kuratomi | Kuratomi | Hide | Hide | ... | Ind-Invit | Ind-Invit | 7/26/44 0:00 | CO | ||||||

| 16102 | jerome1 | 3856 | 3811 | 4091 | 4091 | Kuratomi | Kuratomi | Kuratomi | Hide | Hide | ... | T-T | T | 6/19/44 0:00 | |||||||

| 16103 | jerome1 | 3857 | 3812 | 4091 | 4091 | Kuratomi | Kuratomi | Kuratomi | Ikuko | Ikuko | ... | Ind-Invit | Ind-Invit | 6/1/44 0:00 | IL | ||||||

| 16104 | granada1 | 3819 | 3712 | 4091 | 4091 | Kuratomi | Kuratomi | Kuratomi | Masako | Masako | ... | Ind-Invit | Ind-Invit | 7/26/44 0:00 | CO | ||||||

| 16105 | jerome1 | 3855 | 3810 | 4091 | 4091 | Kuratomi | Kuratomi | Kuratomi | Masako | Masako | ... | T-T | T | 6/19/44 0:00 | |||||||

| 16106 | granada1 | 3818 | 3711 | 4091 | 4091 | Kuratomi | Kuratomi | Kuratomi | Rintaro | Rintaro | ... | Ind-Invit | Ind-Invit | 7/26/44 0:00 | CO | ||||||

| 16107 | jerome1 | 3858 | 3813 | 4091 | 4091 | Kuratomi | Kuratomi | Kuratomi | Rintaro | Rintaro | ... | T-T | T | 6/19/44 0:00 | |||||||

| 16255 | jerome1 | 3859 | 3814 | 4119 | 4119 | Kuratomi | Kuratomi | Kuratomi | Toshio | Toshio | ... | T-S | T-S | 9/26/43 0:00 | |||||||

| 16256 | tulelake1 | 10726 | 10251 | 4119 | 4119 | Kuratomi | Kuratomi | Kuratomi | Utako | Utako | ... | Term-with Grant | Term-With Grant | 1/10/46 0:00 | PA | (Nee Terada - 1685) | |||||

| 16257 | tulelake1 | 10727 | 10252 | 4119 | 4119 | Kuratomi | Kuratomi | Kuratomi | Yuri | Yuri | ... | Term-with Grant | Term-With Grant | 1/10/46 0:00 | PA | ||||||

| 38724 | poston1 | 7304 | 41 | 10033 | 10033 | Kuratomi | Kuratomi | Kuratomi | Akiko | Akiko | ... | Term W-G | Term-With Grant | 1/16/45 0:00 | CO | 28 | 11 | A | |||

| 38725 | poston1 | 7303 | 40 | 10033 | 10033 | Kuratomi | Kuratomi | Kuratomi | Charlotte | Charlotte | ... | Term W-G | Term-With Grant | 1/16/45 0:00 | CO | 28 | 11 | A | |||

| 38726 | poston1 | 7302 | 39 | 10033 | 10033 | Kuratomi | Kuratomi | Kuratomi | Masato | Masato | ... | Ind-Empl | Ind-Empl | 12/6/44 0:00 | CO | 28 | 11 | A | |||

| 38727 | poston1 | 7305 | 42 | 10033 | 10033 | Kuratomi | Kuratomi | Kuratomi | Tatsuto | Tatsuto | ... | Term W-G | Term-With Grant | 1/16/45 0:00 | CO | 28 | 11 | A | |||

| 142361 | rohwer1 | 4581 | 4477 | 11-178 | 11-178 | Kuratomi | Kuratomi | Kuratomi | Iwami | Iwami | ... | Term W-G | Term-With Grant | 8/31/45 0:00 | NY | 6-8-C | 6 | 8 | C | ||

| 142362 | jerome1 | 3851 | 3806 | 11-178 | 11-178 | Kuratomi | Kuratomi | Kuratomi | Iwami | Iwami | ... | T-T | T | 6/30/44 0:00 | |||||||

| 142363 | rohwer1 | 4582 | 4478 | 11-178 | 11-178 | Kuratomi | Kuratomi | Kuratomi | Ray | Ray | ... | Ind-Empl | Ind-Empl | 9/5/44 0:00 | IL | 6-8-C | 6 | 8 | C | ||

| 142364 | jerome1 | 3853 | 3808 | 11-178 | 11-178 | Kuratomi | Kuratomi | Kuratomi | Ray | Ray | ... | T-T | T | 6/30/44 0:00 | |||||||

| 142365 | rohwer1 | 4583 | 4479 | 11-178 | 11-178 | Kuratomi | Kuratomi | Kuratomi | Sadaco | Sadaco | ... | Term W-G | Term-With Grant | 8/31/45 0:00 | NY | 6-8-C | 6 | 8 | C | ||

| 142366 | jerome1 | 3854 | 3809 | 11-178 | 11-178 | Kuratomi | Kuratomi | Kuratomi | Sadako | Sadako | ... | T-T | T | 6/10/44 0:00 | |||||||

| 142367 | rohwer1 | 4584 | 4480 | 11-178 | 11-178 | Kuratomi | Kuratomi | Kuratomi | Tsuyoka | Tsuyoka | ... | Term W-G | Term-With Grant | 8/31/45 0:00 | NY | 6-8-C | 6 | 8 | C | ||

| 142368 | jerome1 | 3852 | 3807 | 11-178 | 11-178 | Kuratomi | Kuratomi | Kuratomi | Tsuyoka | Tsuyoka | ... | T-T | T | 6/10/44 0:00 |

22 rows × 36 columns

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

# Apply the .iloc function to view additional information in index (row) below

fardf.iloc[16255]

FAR Exit Dataset jerome1 original_order 3859 far_line_id 3814 family_number 4119 NEW-family_number 4119 last_name_corrected Kuratomi NEW-last_name_corrected Kuratomi last_name_original Kuratomi first_name_corrected Toshio first_name_original Toshio NEW-first_name_corrected Toshio other_names George NEW-other_names George date_of_birth NEW-date_of_birth year_of_birth NEW-year_of_birth sex M NEW_marital_status S citizenship C alien_registration_no. type_of_original_entry SAAC NEW-type_of_original_entry SaAC NEW-pre-evacuation_address San Diego pre-evacuation_state CA date_of_original_entry 10/30/42 0:00 type_of_final_departure T-S NEW-type_of_final_departure T-S date_of_final_departure 9/26/43 0:00 final_departure_state camp_address_original camp_address_block camp_address_barracks camp_address_room reference notes Name: 16255, dtype: object

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

In this first module, I have shown how to use Pythons contains function to manipulate and analyze specific data within datasets. We harnessed the power of regular expressions to return different variations of values that were inconsisently entered, inlcuding limiting the contains function to return particular values. We identified key figures involved in the 'riot' at Tule Lake by using the counts value function. Futhermore, we located the place of origin, assembly center, first camp location, and place of residence at Tule Lake for one of the many persons invovled in the 'riot' that took place at Tule Lake on November 4, 1944.

In the following module, we will focus on how to use the geographical data collected here to construct datasets that will allow us model and visualize the movements of 25 selected individuals.

# The below command let's us save the modified dataframes into a new output csv file.

# This can be useful when using these files for further steps of processing.

caseno.to_csv('casenodf.csv', index=False)

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

References¶

[1] Photograph; Tule Lake, California. Tule Lake Historic Jail Rehabilitation Project Public Review. . .; Densho Digital Archive; Found on the National Park Service, Tule Lake. [https://www.nps.gov/tule/getinvolved/tule-lake-historic-jail-rehabilitation-project-public-review.htm, May 31, 2018]

Notebooks¶

The below module is organized into a sequential set of Python Notebooks that allows us to interact with the collections related to the Framework for Unlocking and Linking WWII Japanese American Incarceration Biographical Data to explore, clean, prepare, visualize and analyze it from historical context perspective.

- A Framework for Unlocking and Linking WWII Japanese American Incarceration Biographical Data - Context Based Data Manipulation and Analysis - Part 2

- A Framework for Unlocking and Linking WWII Japanese American Incarceration Biographical Data - Data Visualization

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

notebook controller is DISPOSED. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.